How I Used AI to Decode 2,300 Disneyland Reviews and Plan the Perfect Visit

Using AI and NLP to Uncover the Truth About Shanghai Disneyland

Planning a trip to Shanghai Disneyland sounded exciting — until I faced over 2,300 TripAdvisor reviews. Who has time to sift through endless complaints about food, long queues, and staff friendliness to decide which rides are worth it? With the help of AI, I decided to take matters into my own hands.

With all the hype around LLMs, I started thinking about how to use them on a real-world pain point of my own: making sense of TripAdvisor reviews while learning how LLMs work at the same time.

In this article, I’ll explain how I built an AI-powered system that scraped, categorized, summarized, and analyzed thousands of reviews to uncover valuable insights. Whether you’re curious about using AI for a personal project or want to learn more about NLP techniques, this is for you.

Table of Contents:

Why Reading 2,300 Reviews Is a Nightmare

Your Travel Critic Tells You What’s Good and What’s Bad

How GPT Summarized Reviews Like a Pro

A Recommendation System That Understands You

Challenges

Conclusion

Why Reading 2,300 Reviews Is a Nightmare

When I skimmed the first page of Shanghai Disneyland reviews, I struggled to get through them. Each one read like a long, unorganized personal blog post.

One review discusses the rides, another rants about the magic showtime, and a third complains about food and staff.

Reviews are generally long and unorganized, and they are not tailored to any one reader’s needs. After a handful of them, I felt overloaded and wanted help seeing the overall trends beyond the overall rating score.

I decided to build something to tackle my review pain points, and potentially yours.

As a self-respecting novice who had never built anything with LLMs, I turned to ChatGPT for guidance.

The first step was to scrape TripAdvisor’s Shanghai Disneyland reviews page.
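Before any parsing can happen, the page HTML has to be fetched. As a rough sketch, here is how a request could be routed through a proxy-style scraping service. The endpoint and the `api_key` and `url` parameter names follow ScraperAPI's commonly documented pattern, but treat them as assumptions to verify against the service's current docs. `build_proxy_url` is a hypothetical helper, not code from this project.

```python
from urllib.parse import urlencode

# Assumption: the scraping service exposes a single endpoint that takes the
# API key and the target page as query parameters. Check the provider's docs.
PROXY_ENDPOINT = "https://api.scraperapi.com/"


def build_proxy_url(target_url: str, api_key: str) -> str:
    """Wrap a target page URL so the request goes through the proxy service."""
    query = urlencode({"api_key": api_key, "url": target_url})
    return f"{PROXY_ENDPOINT}?{query}"


# Typical use (needs the third-party `requests` and `beautifulsoup4` packages):
#   html = requests.get(build_proxy_url(page_url, api_key), timeout=60).text
#   soup = BeautifulSoup(html, "html.parser")
```

The resulting `soup` object is what the extraction code works on.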

To scrape TripAdvisor, I used the ScraperAPI tool to bypass IP blocking. Here’s the code that extracts review text and ratings:

    # `soup` is the BeautifulSoup object for the fetched reviews page
    # Find all divs with class '_c' as the starting point for reviews
    all_text_blocks = soup.find_all('div', class_='_c')

    for block in all_text_blocks:
        # Extract review text
        review_text_span = block.find('span', class_='JguWG')
        review_text = None
        if review_text_span:
            nested_text = review_text_span.find('span', class_='yCeTE')
            if nested_text:
                review_text = nested_text.get_text(strip=True)

        # Extract rating score (stays None when the rating markup is missing)
        rating_score = None
        rating_block = block.find('svg', class_='UctUV d H0')
        if rating_block:
            title = rating_block.find('title')
            if title:
                rating_text = title.text.strip()
                rating_score = float(rating_text.split(" ")[0])  # Convert to float

Your Travel Critic Tells You What’s Good and What’s Bad

Wouldn’t it be great to browse reviews theme by theme, one aspect at a time? Aspect-based sentiment analysis (ABSA) does exactly that: it gives you a high-level overview of what’s good and what’s not for each aspect.

Imagine ABSA as your food critic who tastes restaurant food and tells you what part is good and what is not. Instead of saying things like, “This pizza is nice,” ABSA says, “The pizza crust is great, but the toppings are bland.”

That’s what I set out to do.

Let’s look at the review data; it contains two columns — review and rating.

I defined the aspects that I am interested in for this review data:

ride quality

cleanliness

staff friendliness

food quality

wait times
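The article does not say how sentences were matched to these aspects. One simple option, shown here as a sketch only, is keyword matching: split each review into sentences and keep the ones that mention a word associated with an aspect. The keyword lists and both helper functions are hypothetical and would need tuning on real reviews.

```python
import re

# Hypothetical keyword lists; tune them to the vocabulary of your own reviews.
ASPECT_KEYWORDS = {
    "ride quality": ["ride", "rides", "coaster", "attraction", "attractions"],
    "cleanliness": ["clean", "dirty", "tidy", "litter", "toilet", "toilets"],
    "staff friendliness": ["staff", "employee", "employees", "rude", "friendly"],
    "food quality": ["food", "meal", "restaurant", "snack", "lunch"],
    "wait times": ["queue", "queues", "wait", "waiting", "line", "lines"],
}


def split_sentences(review: str) -> list[str]:
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", review.strip())
    return [p for p in parts if p]


def sentences_by_aspect(review: str) -> dict[str, list[str]]:
    """Map each aspect to the sentences that mention one of its keywords."""
    matches = {aspect: [] for aspect in ASPECT_KEYWORDS}
    for sentence in split_sentences(review):
        words = set(re.findall(r"[a-z']+", sentence.lower()))
        for aspect, keywords in ASPECT_KEYWORDS.items():
            if words & set(keywords):
                matches[aspect].append(sentence)
    return matches


review = "The rides were amazing. The queue was two hours long! Food was overpriced."
for aspect, sentences in sentences_by_aspect(review).items():
    print(aspect, "->", sentences)
```

Each matched sentence can then be scored by a sentiment classifier, which gives one positive or negative label per aspect mention instead of one per review.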

Now, it’s time to pick a model to complete this task. I decided on distilbert-base-uncased-finetuned-sst-2-english.

Imagine it as a super-intelligent student who has studied English very well. This student also got extra training to determine whether a sentence is sad (negative) or happy (positive).

What sets it apart from other smart students is that it studies only the most essential things, which makes it smaller and faster.

    from transformers import pipeline

    sentiment_pipeline = pipeline(
        "sentiment-analysis",
        model="distilbert-base-uncased-finetuned-sst-2-english",
        tokenizer="distilbert-base-uncased-finetuned-sst-2-english",
        device=0  # Change to -1 if you want to force the CPU
    )

For each review, the model predicted the sentiment as either positive or negative:

At first glance, the bar graph shows that more people had positive experiences than negative ones. Still, converting the counts to percentages shows that almost 40% of people had a negative experience in each of the areas I am interested in.

Er, that doesn’t sound good!

People complain about wait time, food quality, staff friendliness, and cleanliness. Everything about this park!
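For reference, turning label counts into percentages takes only a few lines. This is a sketch with made-up labels, not the article's data. It assumes each prediction has been reduced to an `(aspect, label)` pair using the `POSITIVE` and `NEGATIVE` labels that the SST-2 DistilBERT model emits. `negative_share_by_aspect` is a hypothetical helper.

```python
from collections import Counter, defaultdict


def negative_share_by_aspect(results):
    """Return the percentage of NEGATIVE labels per aspect.

    results: iterable of (aspect, label) pairs, label in {'POSITIVE', 'NEGATIVE'}.
    """
    counts = defaultdict(Counter)
    for aspect, label in results:
        counts[aspect][label] += 1
    return {
        aspect: round(100 * c["NEGATIVE"] / sum(c.values()), 1)
        for aspect, c in counts.items()
    }


# Made-up labels purely for illustration.
sample = [
    ("food quality", "NEGATIVE"),
    ("food quality", "POSITIVE"),
    ("wait times", "NEGATIVE"),
    ("wait times", "NEGATIVE"),
    ("wait times", "POSITIVE"),
]
print(negative_share_by_aspect(sample))
```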

But what were people saying about the park that they didn’t enjoy?

That brings us to the next part — Review Summarization.

How GPT Summarized Reviews Like a Pro

GPT is like your super smart friend who can read many reviews and summarize the most critical points, such as whether the taco is good.

To use the GPT model through the API, you need to purchase credits on the OpenAI website and pass your own API key with each request so the model can complete the summarization.

    from openai import OpenAI

    def summarize_with_gpt(text, api_key):
        # Initialize OpenAI client
        client = OpenAI(api_key=api_key)

        # Make a chat completion request
        response = client.chat.completions.create(
            model="gpt-4",  # Use "gpt-4" or "gpt-4-turbo" for best performance
            messages=[
                {"role": "system", "content": "You are a helpful assistant that summarizes user reviews."},
                {"role": "user", "content": f"Summarize this review: {text}"}
            ],
            max_tokens=150  # Adjust this based on your needs
        )
        return response.choices[0].message.content

I ran into some challenges using the GPT model. For instance, summarizing my review data takes quite a long time, especially when some reviews are very long. Longer reviews also mean more tokens, and more tokens consume more credits!

I went with batch processing and summarised the first 100 reviews as a starting point.
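The batch function that follows calls a helper named `summarize_with_gpt_safe`, which the article never shows. A minimal sketch of what such a wrapper might look like is given here, assuming its job is to cap the input length, retry failed calls with a growing delay, and return `None` when every attempt fails. The `summarize`, `max_chars`, `retries`, and `base_delay` parameters are all hypothetical.

```python
import time


def summarize_with_gpt_safe(review, api_key, summarize=None,
                            max_chars=4000, retries=3, base_delay=1.0):
    """Hypothetical wrapper: truncate long reviews and retry failed API calls.

    Returns the summary, or None if the review is empty or all attempts fail.
    """
    if summarize is None:
        # Falls back to the article's summarize_with_gpt, looked up at call time.
        summarize = summarize_with_gpt
    if not isinstance(review, str) or not review.strip():
        return None

    text = review[:max_chars]  # Cap input size to limit token usage
    for attempt in range(retries):
        try:
            return summarize(text, api_key)
        except Exception as exc:  # Broad on purpose: any failure triggers a retry
            if attempt == retries - 1:
                print(f"Giving up after {retries} attempts: {exc}")
                return None
            time.sleep(base_delay * 2 ** attempt)  # Exponential backoff
    return None
```

Returning `None` on failure leaves the `summary` cell empty, so a later run that only processes rows without summaries picks those reviews up again.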

    import time
    import pandas as pd

    # Batch processing and saving function
    def process_and_save(df, api_key, batch_size=10):
        for i in range(0, len(df), batch_size):
            batch = df.iloc[i:i+batch_size]  # Process the current batch

            # Process only rows without summaries
            for index, row in batch.iterrows():
                if pd.isna(row['summary']):
                    # summarize_with_gpt_safe wraps summarize_with_gpt (definition not shown)
                    df.at[index, 'summary'] = summarize_with_gpt_safe(row['review'], api_key)

            # Save progress incrementally
            updated_rows = df.iloc[i:i+batch_size]  # Get only the rows in this batch
            updated_rows.to_csv(
                'reviews_with_summaries.csv',
                mode='a',  # Append to the existing file
                index=False,
                header=(i == 0)  # Include header only for the first batch
            )
            print(f"Batch {i // batch_size + 1} saved at {time.strftime('%Y-%m-%d %H:%M:%S')}")

Using my first 100 reviews, I went on to visualize the most common words people mentioned in the reviews:

People mentioned “queue” and “food,” which probably reflects the negative sentiment we saw earlier. “App,” “show,” “pirates,” and “Tron” also came up, which prompted me to download the Shanghai Disneyland app, settle on specific rides such as Pirates of the Caribbean and Tron, and check the show times, especially for the fireworks display!

I am still deciding whether to buy the fast pass, which seems a must since so many people complain about long queues.

I also checked the 20 most commonly used words, which are consistent with what the word cloud shows:
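A frequency count like this can be sketched with the standard library alone. The stopword list here is a tiny hypothetical stand-in for the fuller list that a real word-cloud or NLP package would provide, and `top_words` is an illustrative helper rather than the code used for the chart.

```python
import re
from collections import Counter

# Tiny illustrative stopword list; real projects use a much longer one.
STOPWORDS = {
    "the", "and", "was", "were", "for", "with", "but", "that", "this",
    "are", "had", "have", "you", "not", "very", "our", "they", "there",
}


def top_words(texts, n=20):
    """Return the n most frequent non-stopword tokens across all texts."""
    counts = Counter()
    for text in texts:
        for word in re.findall(r"[a-z]+", text.lower()):
            if len(word) > 2 and word not in STOPWORDS:
                counts[word] += 1
    return counts.most_common(n)


# Made-up summaries purely for illustration.
summaries = ["The queue was long", "Long queue for food", "Food and queue"]
print(top_words(summaries, n=3))
```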

At this point, I have settled on my Shanghai Disneyland strategy: go early in the morning, pack some lunch, check the show times on the app, go on at least the Pirates of the Caribbean and Tron rides, buy a fast pass if necessary, and make sure we stay for the fireworks!

A Recommendation System That Understands You

The good thing about a recommender system for reviews is that you can read only the reviews tailored to your needs.

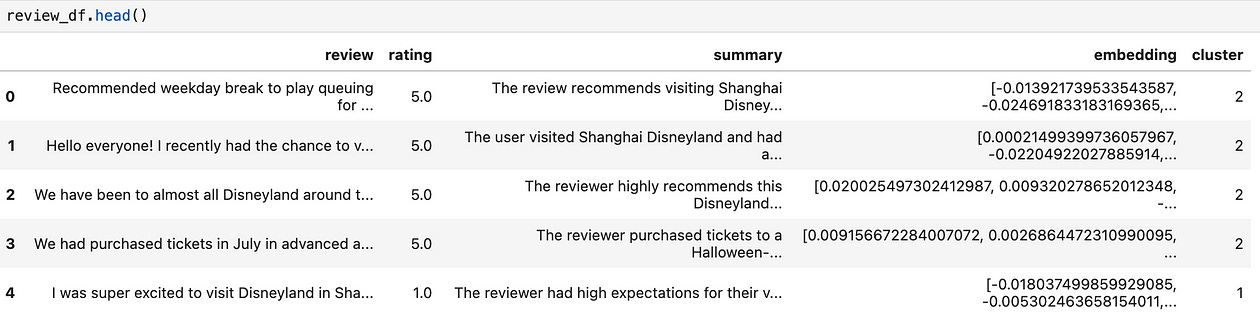

Using the summarized review data, I translated the reviews into embeddings. Think of embeddings as the robot’s way of understanding human language: they turn sentences into numbers that capture the essence of what’s being said, like translating emotions into math.

Then, I used KMeans clustering to sort those embeddings into meaningful groups. It’s like sorting your Lego pieces by color: each cluster groups similar reviews based on what they talk about.

To make it even cooler, I built a web interface with Streamlit — like a magical portal where you can type in what you’re looking for, like “family rides with little wait time,” and hooray! The system fetches reviews tailored to your wish. Behind the scenes, it’s like the toy shop reading your request, turning it into a robot’s language, figuring out which section (cluster) it belongs to, and pulling out the perfect recommendations.
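The lookup described above (embed the query, find its cluster, return the closest reviews in that cluster) can be sketched in plain Python. To stay self-contained, this sketch swaps in a toy bag-of-words vector for a real sentence-embedding model, takes the cluster labels as given rather than fitting KMeans, and uses cosine similarity for both steps. All function names and the sample data are hypothetical.

```python
import math
import re
from collections import defaultdict


def embed(text, vocab):
    """Toy bag-of-words vector; a real system would use a sentence-embedding model."""
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(term)) for term in vocab]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def centroids(vectors, labels):
    """Mean vector of each cluster, given cluster labels (for example from KMeans)."""
    groups = defaultdict(list)
    for vec, label in zip(vectors, labels):
        groups[label].append(vec)
    return {
        label: [sum(col) / len(vecs) for col in zip(*vecs)]
        for label, vecs in groups.items()
    }


def recommend(query, reviews, labels, vocab, top_k=2):
    """Pick the cluster closest to the query, then rank that cluster's reviews."""
    vectors = [embed(r, vocab) for r in reviews]
    cents = centroids(vectors, labels)
    q = embed(query, vocab)
    best = max(cents, key=lambda label: cosine(q, cents[label]))
    members = [
        (cosine(q, vec), review)
        for vec, review, label in zip(vectors, reviews, labels)
        if label == best
    ]
    members.sort(key=lambda pair: pair[0], reverse=True)
    return [review for _, review in members[:top_k]]


# Made-up reviews and cluster labels purely for illustration.
vocab = ["ride", "queue", "food", "staff"]
reviews = [
    "Great ride short queue",
    "The ride had a long queue",
    "Food was bland",
    "Food was cold and staff were slow",
]
labels = [0, 0, 1, 1]
print(recommend("food options", reviews, labels, vocab))
```

Restricting the search to one cluster keeps the lookup cheap, at the cost of missing a relevant review that happened to land in a neighboring cluster.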

Want to see it for yourself? Check it out here:

Challenges

I ran into three main challenges during this project:

The HTML structure of TripAdvisor was complex and inconsistent. After a lot of trial and error, I discovered the right tags to extract reviews reliably.

Condensing reviews into short summaries with the GPT model took a long time. Each review is typically lengthy and unorganized, so every request is slow, and the processing time adds up quickly across the dataset.

Deploying the Streamlit web application and testing it with real user input took a long time. At first, I had to spin up the model and rerun every step each time to get it working. Instead, I saved the fitted models and datasets and loaded them directly, which got the app up and running quickly.
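The save-and-reload approach from the last challenge could look roughly like this. How the models were actually persisted is not stated in the article. `load_or_build` and the file name are hypothetical, and `pickle` is only one of several options for storing fitted objects.

```python
import pickle
from pathlib import Path


def load_or_build(path, build):
    """Return the object cached at `path`, or build it once and cache it.

    `build` is a zero-argument function that does the expensive work,
    such as computing embeddings or fitting a clustering model.
    """
    path = Path(path)
    if path.exists():
        # Only unpickle files you created yourself; pickle can execute code on load.
        with path.open("rb") as f:
            return pickle.load(f)
    obj = build()
    with path.open("wb") as f:
        pickle.dump(obj, f)
    return obj


# Example with a stand-in for an expensive step (hypothetical file name):
#   labels = load_or_build("cluster_labels.pkl", lambda: fit_clusters(embeddings))
```

With this pattern the app does the slow work once and reads the cached result on every later start.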

Conclusion

I started this project out of frustration as a parent planning a Disneyland trip. It ended with a valuable tool that transforms overwhelming reviews into actionable insights.

What’s your real-world struggle, and could you use AI to solve it? Whether you’re planning a trip, brainstorming social media content ideas, or tackling a different problem, I encourage you to explore building something with AI.